RGB-only real-time gesture recognition for screen control

Real-time gesture control is often held back by heavy models, a reliance on depth sensors, or high latency. This project targeted robust gesture recognition from RGB video alone, under an explicit real-time constraint, using hand landmarks and a lightweight temporal model.
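To illustrate why a landmark-based temporal CNN is cheap enough for real time, here is a minimal NumPy sketch of a forward pass. It assumes each frame is flattened to 21 MediaPipe hand landmarks × (x, y, z) = 63 features. The layer count, hidden width (32), kernel size (3), class count, and the helper names (`conv1d_time`, `tcn_logits`, `init_params`) are hypothetical illustrations, not the project's actual architecture, and the weights are random rather than trained.

```python
import numpy as np

NUM_LANDMARKS = 21            # MediaPipe Hands outputs 21 landmarks per hand
FEATURES = NUM_LANDMARKS * 3  # (x, y, z) per landmark, flattened per frame

def conv1d_time(x, weight, bias):
    """Valid 1D convolution along the time axis.

    x: (T, C_in), weight: (K, C_in, C_out), bias: (C_out,) -> (T - K + 1, C_out)
    """
    k = weight.shape[0]
    t_out = x.shape[0] - k + 1
    out = np.empty((t_out, weight.shape[2]))
    for t in range(t_out):
        window = x[t:t + k]  # K consecutive frames
        out[t] = np.einsum("kc,kco->o", window, weight) + bias
    return out

def tcn_logits(seq, params):
    """Two temporal conv layers with ReLU, global average pooling over time, linear head."""
    h = np.maximum(conv1d_time(seq, params["w1"], params["b1"]), 0.0)
    h = np.maximum(conv1d_time(h, params["w2"], params["b2"]), 0.0)
    pooled = h.mean(axis=0)  # collapse the time axis
    return pooled @ params["w_out"] + params["b_out"]

def init_params(num_classes, hidden=32, kernel=3, seed=0):
    """Hypothetical random initialization; a real model would be trained."""
    rng = np.random.default_rng(seed)
    return {
        "w1": rng.normal(0, 0.1, (kernel, FEATURES, hidden)),
        "b1": np.zeros(hidden),
        "w2": rng.normal(0, 0.1, (kernel, hidden, hidden)),
        "b2": np.zeros(hidden),
        "w_out": rng.normal(0, 0.1, (hidden, num_classes)),
        "b_out": np.zeros(num_classes),
    }

# Usage: a 30-frame landmark sequence scored against 5 hypothetical gesture classes.
seq = np.random.default_rng(1).normal(size=(30, FEATURES))
logits = tcn_logits(seq, init_params(num_classes=5))
```

Because the input is 63 numbers per frame instead of a full image, each convolution touches only a few thousand weights, which is the main reason this family of models suits edge-friendly inference.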

I led data collection and preprocessing and built the dataset pipeline end to end, from RGB frames to MediaPipe hand landmarks to cleaned training tensors. I also implemented and validated the first half of the system, so that training and evaluation were stable on both the benchmark and our custom dataset. Beyond that, I supported experiment setup and analysis to make sure the data pipeline behaved reliably under both “clean” and “messy” conditions.
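The cleaning stage between landmarks and training tensors can be sketched as follows. This is a hypothetical illustration, not the project's code: it assumes MediaPipe extraction has already run, producing a `(frames, 21, 3)` array in which frames with no detected hand are NaN. The chosen steps (forward-filling missed detections, wrist-centred scale normalization, and linear resampling to a fixed length of 32) and all function names are assumptions picked as common choices for landmark sequences.

```python
import numpy as np

WRIST = 0  # index of the wrist in MediaPipe Hands' 21-landmark layout

def fill_missing(frames):
    """Forward-fill frames with no detection (NaN rows); back-fill any leading gap."""
    valid = ~np.isnan(frames).any(axis=(1, 2))
    if not valid.any():
        raise ValueError("sequence has no valid frames")
    idx = np.where(valid, np.arange(len(frames)), -1)
    idx = np.maximum.accumulate(idx)  # last valid frame at or before each position
    idx[idx < 0] = np.argmax(valid)   # leading gap takes the first valid frame
    return frames[idx]

def normalize(frames):
    """Put the wrist at the origin and scale each frame to unit maximum extent."""
    centered = frames - frames[:, WRIST:WRIST + 1, :]
    scale = np.linalg.norm(centered, axis=2).max(axis=1, keepdims=True)  # (F, 1)
    scale = np.where(scale > 0, scale, 1.0)  # avoid dividing by zero
    return centered / scale[:, :, None]

def resample(frames, length):
    """Linearly interpolate along time to a fixed number of frames."""
    f = frames.shape[0]
    src = np.linspace(0, f - 1, length)
    lo = np.floor(src).astype(int)
    hi = np.minimum(lo + 1, f - 1)
    w = (src - lo)[:, None, None]
    return (1 - w) * frames[lo] + w * frames[hi]

def to_tensor(frames, length=32):
    """Full hypothetical cleaning pass: (F, 21, 3) with NaNs -> (length, 63) float32."""
    x = resample(normalize(fill_missing(frames)), length)
    return x.reshape(length, -1).astype(np.float32)
```

Normalizing relative to the wrist removes where the hand sits in the frame and how far it is from the camera, which is one plausible way to keep a model stable across the “clean” benchmark and “messy” custom recordings.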

The final system achieved 88.84% accuracy at 18.85 ms latency on the IPN Hand dataset, and 98.85% accuracy on our custom, messy screen-control dataset after fine-tuning.

Keywords: computer vision, HCI, temporal CNNs, edge-friendly inference

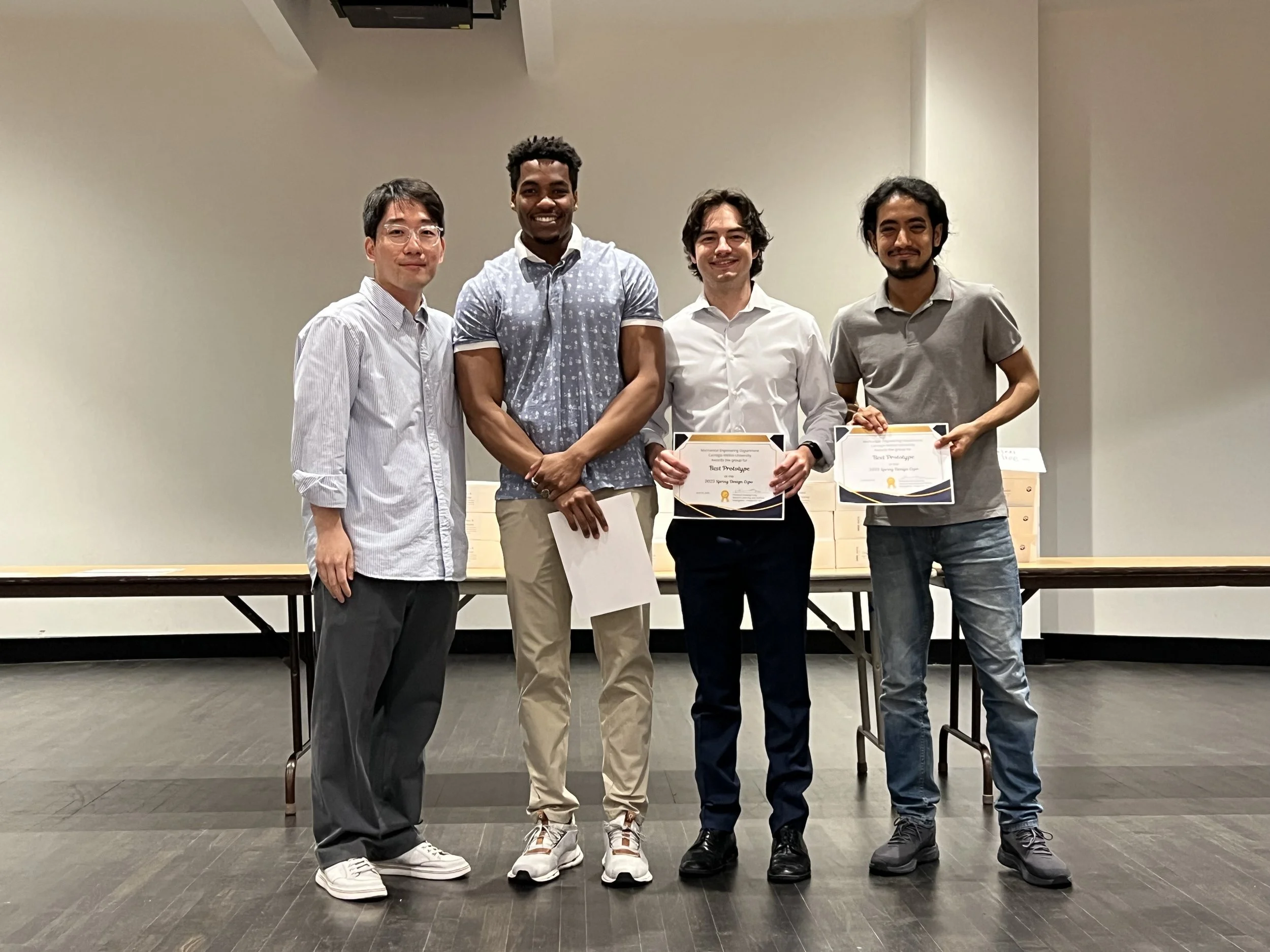

Recognition: Best Prototype, CMU MechE Spring Design Expo (24-788)